Understand Decision Tree Learning

Decision tree learning is a supervised learning algorithm, which can be used for classification as well as regression tasks.

It mimics the way humans make decisions and works well in many applications. In this post you will learn how to construct a decision tree using the Gini index.

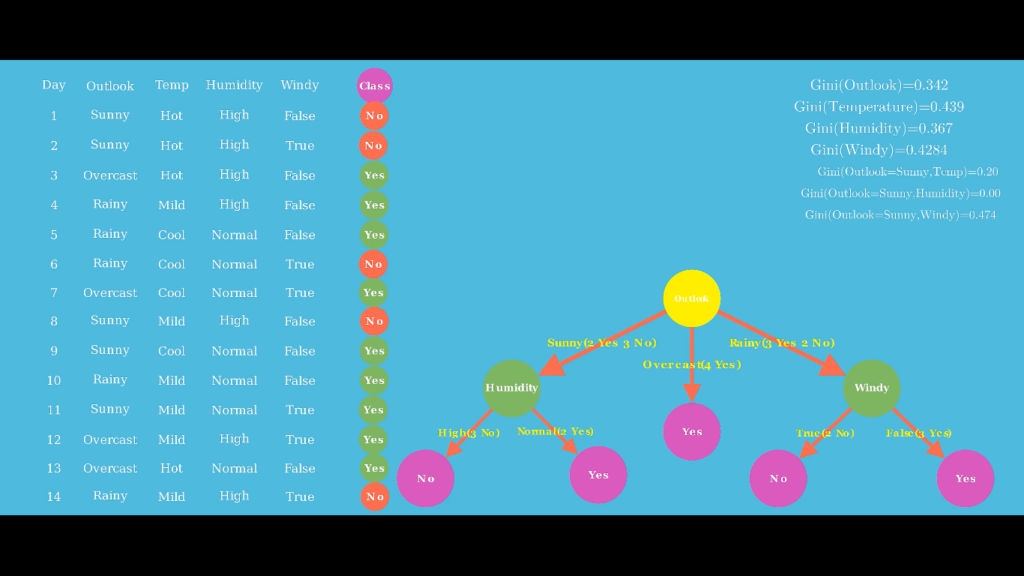

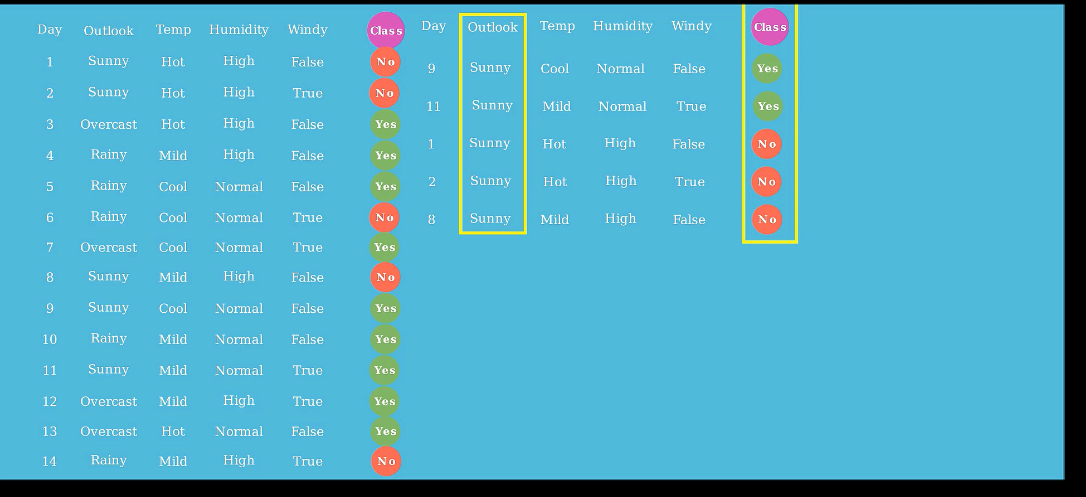

I have taken the weather data set, which has 14 instances and four independent features: Outlook, Temperature, Humidity and Windy. The dependent feature, or class, has two values, Yes and No. Yes means the player will play tennis, and No means the player will not. The supervised learning task is therefore to predict, from these four features, whether a player will play tennis.

The data set has 9 instances of class Yes and 5 instances of class No.
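The table itself appears only as an image. The sketch below assumes it is the classic 14-row play-tennis weather table, and its class totals match the 9 Yes / 5 No stated above. The rows are a hand transcription, and the name `WEATHER` is hypothetical.

```python
from collections import Counter

# Assumption: the classic 14-row play-tennis table, transcribed by hand.
# Columns: Outlook, Temperature, Humidity, Windy, Play
WEATHER = [
    ("Sunny", "Hot", "High", False, "No"),
    ("Sunny", "Hot", "High", True, "No"),
    ("Overcast", "Hot", "High", False, "Yes"),
    ("Rainy", "Mild", "High", False, "Yes"),
    ("Rainy", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Cool", "Normal", True, "No"),
    ("Overcast", "Cool", "Normal", True, "Yes"),
    ("Sunny", "Mild", "High", False, "No"),
    ("Sunny", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Mild", "Normal", False, "Yes"),
    ("Sunny", "Mild", "Normal", True, "Yes"),
    ("Overcast", "Mild", "High", True, "Yes"),
    ("Overcast", "Hot", "Normal", False, "Yes"),
    ("Rainy", "Mild", "High", True, "No"),
]

# Count the class labels stored in the last column.
class_counts = Counter(row[-1] for row in WEATHER)
print(len(WEATHER), dict(class_counts))  # 14 {'No': 5, 'Yes': 9}
```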

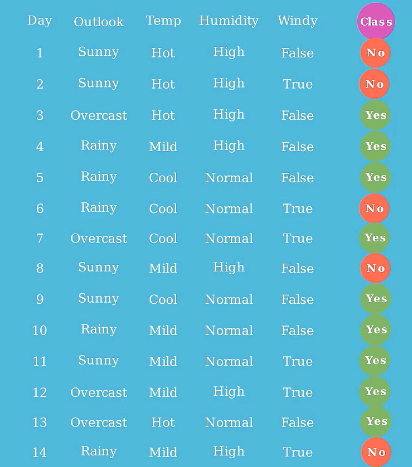

To choose the root node, we calculate the Gini index for every feature. The images below show the formula, an explanation of it, and how the Gini index is used.

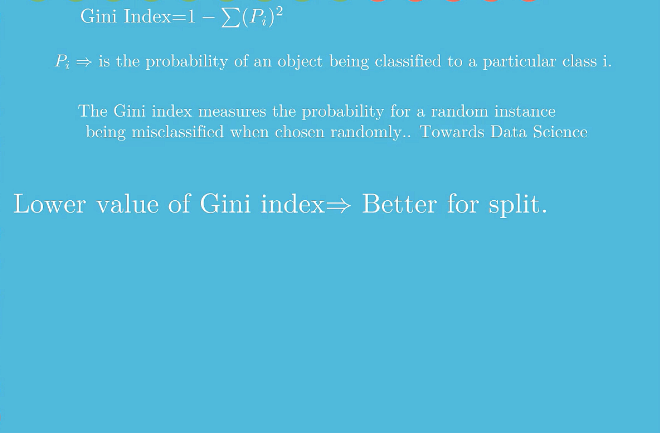

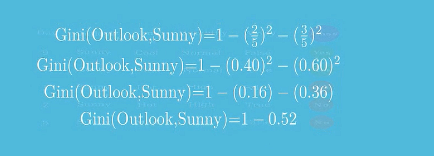
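As a small sketch, assuming the images use the usual definition Gini = 1 - Σ p_i², where p_i is the fraction of instances in class i, the index can be computed from the class counts of a node. The function name `gini` is a hypothetical choice.

```python
def gini(counts):
    """Gini index 1 - sum(p_i^2) of a node, given its class counts."""
    total = sum(counts)
    if total == 0:
        return 0.0  # treat an empty node as pure
    return 1.0 - sum((c / total) ** 2 for c in counts)

# Whole data set before any split: 9 Yes and 5 No.
print(round(gini([9, 5]), 3))  # 0.459
```

A value of 0 means the node is pure, meaning every instance has the same class. With two classes the maximum is 0.5, reached at a 50/50 mix.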

Now let us calculate the Gini index for the attribute Outlook with the value Sunny.

In the image above, the five instances with Outlook=Sunny consist of 2 Yes and 3 No.

So the Gini index is 0.48 (see the image below), which gives

Gini(Outlook, Sunny)=0.48

In the same way we can calculate

Gini(Outlook, Overcast)=0.00 and Gini(Outlook, Rainy)=0.48
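As a worked check, the three values follow from the formula under some stated assumptions. Sunny has 2 Yes and 3 No, as stated above. Overcast has 4 Yes and 0 No, and Rainy has 3 Yes and 2 No. The Overcast and Rainy counts are taken from the classic play-tennis table rather than from the text.

```latex
\begin{aligned}
\mathrm{Gini}(\text{Outlook}, \text{Sunny})    &= 1 - \left(\tfrac{2}{5}\right)^2 - \left(\tfrac{3}{5}\right)^2 = 1 - 0.16 - 0.36 = 0.48 \\
\mathrm{Gini}(\text{Outlook}, \text{Overcast}) &= 1 - \left(\tfrac{4}{4}\right)^2 - \left(\tfrac{0}{4}\right)^2 = 0 \\
\mathrm{Gini}(\text{Outlook}, \text{Rainy})    &= 1 - \left(\tfrac{3}{5}\right)^2 - \left(\tfrac{2}{5}\right)^2 = 1 - 0.36 - 0.16 = 0.48
\end{aligned}
```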

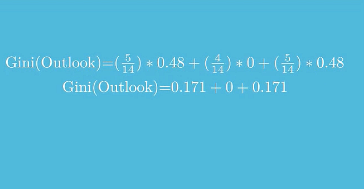

Next we calculate the weighted average of Gini(Outlook, Sunny), Gini(Outlook, Overcast) and Gini(Outlook, Rainy). Each value is weighted by the fraction of instances that fall in that branch (see the image below).

Then Gini(Outlook) is

Gini(Outlook)=0.342
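The weighting step can be sketched as follows. The sketch assumes each branch is given as a list of [Yes, No] counts and that each branch's weight is its size divided by the total number of instances. `weighted_gini` is a hypothetical helper name.

```python
def gini(counts):
    """Gini index 1 - sum(p_i^2) of a node, given its class counts."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts) if total else 0.0

def weighted_gini(branches):
    """Average of the branch Gini indices, weighted by branch size."""
    n = sum(sum(b) for b in branches)
    return sum(sum(b) / n * gini(b) for b in branches)

# Outlook branches as [Yes, No] counts: Sunny, Overcast, Rainy.
outlook = [[2, 3], [4, 0], [3, 2]]
print(round(weighted_gini(outlook), 4))  # 0.3429
```

The exact value is 5/14 · 0.48 + 4/14 · 0 + 5/14 · 0.48 = 12/35 ≈ 0.3429, which the text reports to three digits as 0.342.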

In the same way we calculate Gini(Temperature), Gini(Humidity) and Gini(Windy). All four features then have the following Gini index:

Gini(Outlook)=0.342

Gini(Temperature)=0.440

Gini(Humidity)=0.367

Gini(Windy)=0.4286
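All four weighted Gini indices can be reproduced in one pass over the data. This sketch again assumes the classic play-tennis table, transcribed by hand. It splits on every value of a feature, as the post does. `feature_gini` is a hypothetical name.

```python
from collections import Counter, defaultdict

# Assumption: the classic 14-row play-tennis table, transcribed by hand.
# Columns: Outlook, Temperature, Humidity, Windy, Play
FEATURES = ["Outlook", "Temperature", "Humidity", "Windy"]
WEATHER = [
    ("Sunny", "Hot", "High", False, "No"),
    ("Sunny", "Hot", "High", True, "No"),
    ("Overcast", "Hot", "High", False, "Yes"),
    ("Rainy", "Mild", "High", False, "Yes"),
    ("Rainy", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Cool", "Normal", True, "No"),
    ("Overcast", "Cool", "Normal", True, "Yes"),
    ("Sunny", "Mild", "High", False, "No"),
    ("Sunny", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Mild", "Normal", False, "Yes"),
    ("Sunny", "Mild", "Normal", True, "Yes"),
    ("Overcast", "Mild", "High", True, "Yes"),
    ("Overcast", "Hot", "Normal", False, "Yes"),
    ("Rainy", "Mild", "High", True, "No"),
]

def gini(labels):
    """Gini index 1 - sum(p_i^2) of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def feature_gini(rows, col):
    """Weighted average Gini index of the branches created by splitting on col."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[col]].append(row[-1])  # keep only the class label per branch
    return sum(len(g) / len(rows) * gini(g) for g in groups.values())

scores = {name: feature_gini(WEATHER, i) for i, name in enumerate(FEATURES)}
for name, score in scores.items():
    print(f"Gini({name}) = {score:.4f}")
# Gini(Outlook) = 0.3429
# Gini(Temperature) = 0.4405
# Gini(Humidity) = 0.3673
# Gini(Windy) = 0.4286
```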

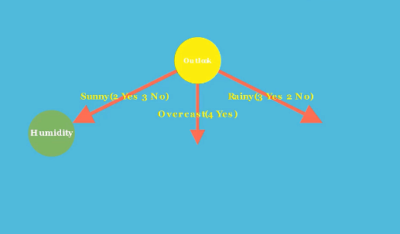

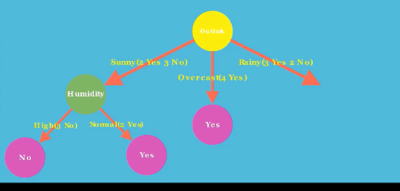

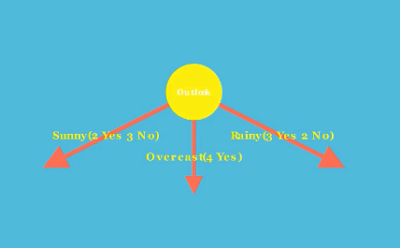

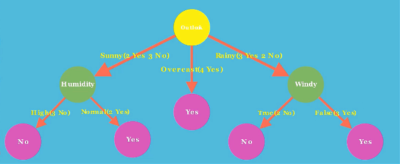

Among all features, Outlook has the lowest value, Gini(Outlook)=0.342. Outlook therefore becomes the root node, with Sunny, Overcast and Rainy as its branches (see the image below).
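The root selection rule picks the feature with the smallest weighted Gini index. The dictionary below holds approximate values from the calculation above, and the names are illustrative.

```python
# Approximate weighted Gini index of each candidate root feature.
scores = {"Outlook": 0.342, "Temperature": 0.440, "Humidity": 0.367, "Windy": 0.429}

# The root is the feature whose split leaves the least impurity.
root = min(scores, key=scores.get)
print(root)  # Outlook
```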

Next we select the sub node for the Sunny branch. For this we calculate the Gini index of the remaining features Temperature, Humidity and Windy using only the instances with Outlook=Sunny, namely:

Gini(Outlook=Sunny,Temperature)

Gini(Outlook=Sunny,Humidity)

Gini(Outlook=Sunny,Windy)

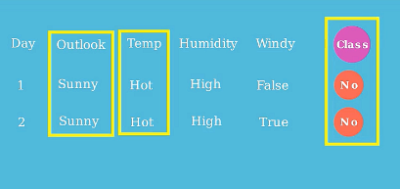

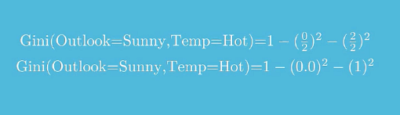

To calculate Gini(Outlook=Sunny,Temperature), we first calculate the Gini index for Temperature=Hot given Outlook=Sunny. All of these instances are No, so it is

Gini(Outlook=Sunny,Temperature=Hot)=0.00 (see the image below).

The other values are

Gini(Outlook=Sunny,Temperature=Cool)=0.00 and Gini(Outlook=Sunny,Temperature=Mild)=0.50

Taking the weighted average of Gini(Outlook=Sunny,Temperature=Hot), Gini(Outlook=Sunny,Temperature=Cool) and Gini(Outlook=Sunny,Temperature=Mild) gives Gini(Outlook=Sunny,Temperature)=0.20.
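The weighted average can be written out as follows. This assumes the five Sunny instances split into 2 Hot (both No), 2 Mild (one Yes, one No) and 1 Cool (Yes), as in the classic play-tennis table. The Mild term is 1 - (1/2)² - (1/2)² = 0.50.

```latex
\begin{aligned}
\mathrm{Gini}(\text{Outlook=Sunny}, \text{Temperature})
  &= \tfrac{2}{5}\,\mathrm{Gini}(\text{Hot}) + \tfrac{2}{5}\,\mathrm{Gini}(\text{Mild}) + \tfrac{1}{5}\,\mathrm{Gini}(\text{Cool}) \\
  &= \tfrac{2}{5}(0.00) + \tfrac{2}{5}(0.50) + \tfrac{1}{5}(0.00) = 0.20
\end{aligned}
```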

In the same way we calculate Gini(Outlook=Sunny, Humidity) and Gini(Outlook=Sunny, Windy).

This gives:

Gini(Outlook=Sunny,Temperature)=0.20

Gini(Outlook=Sunny, Humidity)=0.00

Gini(Outlook=Sunny, Windy)=0.467
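The three values for the Sunny branch can be checked on just the five Sunny rows. These rows are assumed from the classic play-tennis table, with the Outlook column dropped. The helper names are hypothetical.

```python
from collections import Counter, defaultdict

# Assumption: the five Outlook=Sunny rows of the classic play-tennis table.
# Columns: Temperature, Humidity, Windy, Play
SUNNY = [
    ("Hot", "High", False, "No"),
    ("Hot", "High", True, "No"),
    ("Mild", "High", False, "No"),
    ("Cool", "Normal", False, "Yes"),
    ("Mild", "Normal", True, "Yes"),
]
FEATURES = ["Temperature", "Humidity", "Windy"]

def gini(labels):
    """Gini index 1 - sum(p_i^2) of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def feature_gini(rows, col):
    """Weighted average Gini index of the branches created by splitting on col."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[col]].append(row[-1])
    return sum(len(g) / len(rows) * gini(g) for g in groups.values())

scores = {name: feature_gini(SUNNY, i) for i, name in enumerate(FEATURES)}
for name, score in scores.items():
    print(f"Gini(Outlook=Sunny, {name}) = {score:.3f}")
# Gini(Outlook=Sunny, Temperature) = 0.200
# Gini(Outlook=Sunny, Humidity) = 0.000
# Gini(Outlook=Sunny, Windy) = 0.467
```

For Windy the exact value is 3/5 · 4/9 + 2/5 · 0.5 = 7/15 ≈ 0.467.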

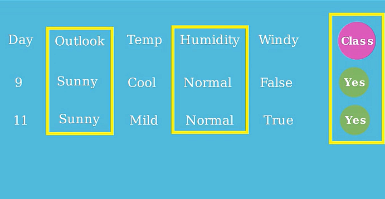

Gini(Outlook=Sunny, Humidity) has the minimum value, so the Humidity feature goes below the Sunny branch.

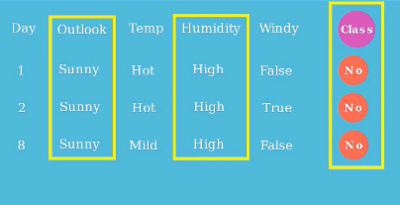

For Outlook=Sunny and Humidity=High all classes are No, and for Outlook=Sunny and Humidity=Normal all classes are Yes. Both branches are therefore pure and become leaf nodes.

The decision tree now grows as shown in the image below.

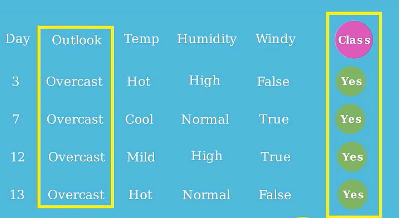

Next we look for the sub node of the Overcast branch.

For Outlook=Overcast all classes are Yes, so the Overcast branch ends in the leaf node Yes.

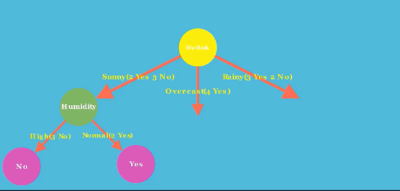

See the tree below.

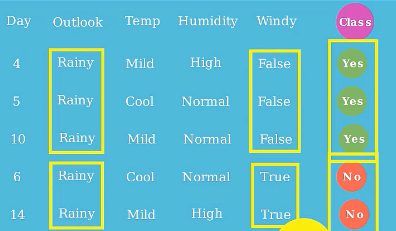

Now let us find the sub node for the Rainy branch (see the image below). Splitting on Windy separates the classes perfectly:

For Outlook=Rainy and Windy=False all classes are Yes.

For Outlook=Rainy and Windy=True all classes are No.
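The same Gini comparison for the Rainy branch shows why Windy is chosen. This sketch assumes the five Rainy rows of the classic play-tennis table, with the Outlook column dropped. The helper names are hypothetical.

```python
from collections import Counter, defaultdict

# Assumption: the five Outlook=Rainy rows of the classic play-tennis table.
# Columns: Temperature, Humidity, Windy, Play
RAINY = [
    ("Mild", "High", False, "Yes"),
    ("Cool", "Normal", False, "Yes"),
    ("Cool", "Normal", True, "No"),
    ("Mild", "Normal", False, "Yes"),
    ("Mild", "High", True, "No"),
]
FEATURES = ["Temperature", "Humidity", "Windy"]

def gini(labels):
    """Gini index 1 - sum(p_i^2) of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def feature_gini(rows, col):
    """Weighted average Gini index of the branches created by splitting on col."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[col]].append(row[-1])
    return sum(len(g) / len(rows) * gini(g) for g in groups.values())

scores = {name: feature_gini(RAINY, i) for i, name in enumerate(FEATURES)}
best = min(scores, key=scores.get)  # lowest weighted Gini wins
for name, score in scores.items():
    print(f"Gini(Outlook=Rainy, {name}) = {score:.3f}")
print("sub node:", best)
# Gini(Outlook=Rainy, Temperature) = 0.467
# Gini(Outlook=Rainy, Humidity) = 0.467
# Gini(Outlook=Rainy, Windy) = 0.000
# sub node: Windy
```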

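Windy therefore becomes the sub node of the Rainy branch, with a Yes leaf for Windy=False and a No leaf for Windy=True.

This completes the final decision tree: Outlook at the root, Humidity below Sunny, the leaf Yes below Overcast, and Windy below Rainy.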
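The whole procedure can be sketched as a short recursive function. The sketch makes a few assumptions. The data is the classic play-tennis table, transcribed by hand. Each split creates one branch per feature value, as in this post. A node becomes a leaf when it is pure or has no features left, in which case it takes the majority class. `build_tree` and `predict` are hypothetical helpers, not a library API.

```python
from collections import Counter, defaultdict

# Assumption: the classic 14-row play-tennis table, transcribed by hand.
# Columns: Outlook, Temperature, Humidity, Windy, Play
FEATURES = ["Outlook", "Temperature", "Humidity", "Windy"]
WEATHER = [
    ("Sunny", "Hot", "High", False, "No"),
    ("Sunny", "Hot", "High", True, "No"),
    ("Overcast", "Hot", "High", False, "Yes"),
    ("Rainy", "Mild", "High", False, "Yes"),
    ("Rainy", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Cool", "Normal", True, "No"),
    ("Overcast", "Cool", "Normal", True, "Yes"),
    ("Sunny", "Mild", "High", False, "No"),
    ("Sunny", "Cool", "Normal", False, "Yes"),
    ("Rainy", "Mild", "Normal", False, "Yes"),
    ("Sunny", "Mild", "Normal", True, "Yes"),
    ("Overcast", "Mild", "High", True, "Yes"),
    ("Overcast", "Hot", "Normal", False, "Yes"),
    ("Rainy", "Mild", "High", True, "No"),
]

def gini(labels):
    """Gini index 1 - sum(p_i^2) of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def split(rows, col):
    """Group rows by their value in column col (one branch per value)."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[col]].append(row)
    return groups

def weighted_gini(rows, col):
    """Weighted average Gini index of the branches produced by splitting on col."""
    return sum(len(g) / len(rows) * gini([r[-1] for r in g])
               for g in split(rows, col).values())

def build_tree(rows, cols):
    """Hypothetical helper: grow a multiway tree, choosing the lowest-Gini feature."""
    labels = [r[-1] for r in rows]
    # A pure node, or one with no features left, becomes a majority-class leaf.
    if len(set(labels)) == 1 or not cols:
        return Counter(labels).most_common(1)[0][0]
    best = min(cols, key=lambda c: weighted_gini(rows, c))
    rest = [c for c in cols if c != best]
    return {FEATURES[best]: {value: build_tree(subset, rest)
                             for value, subset in split(rows, best).items()}}

def predict(node, row):
    """Hypothetical helper: follow branches until a leaf label is reached."""
    while isinstance(node, dict):
        feature, branches = next(iter(node.items()))
        node = branches[row[FEATURES.index(feature)]]
    return node

tree = build_tree(WEATHER, list(range(len(FEATURES))))
print(tree)
print(predict(tree, ("Sunny", "Cool", "High", True)))  # No
```

The printed tree is `{'Outlook': {'Sunny': {'Humidity': {'High': 'No', 'Normal': 'Yes'}}, 'Overcast': 'Yes', 'Rainy': {'Windy': {False: 'Yes', True: 'No'}}}}`, which matches the tree built by hand above. `predict` raises a `KeyError` for a feature value never seen during training. Libraries such as scikit-learn's `DecisionTreeClassifier` build binary splits instead, so a tree from them will be shaped differently.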

See the video to learn all of these concepts in one go.